SIDIRE: Synthetic Image Dataset for Illumination Robustness Evaluation

SIDIRE is a freely available dataset of synthetically generated images that allow investigating the influence of illumination changes on object appearance. The images are renderings of 3D coin models with different material BRDFs and levels of texturedness. Because the same objects appear under every condition, the dataset makes it possible to directly evaluate the influence of these conditions on image recognition performance, without the bias that would be introduced by using different objects in different image sets. The dataset has been used for evaluation in [1].

Download and Use

The dataset is now available at Zenodo:

The dataset is freely available for non-commercial research use. Please cite our paper [1] when using the dataset for your research.

Technical Details

Full Image Dataset

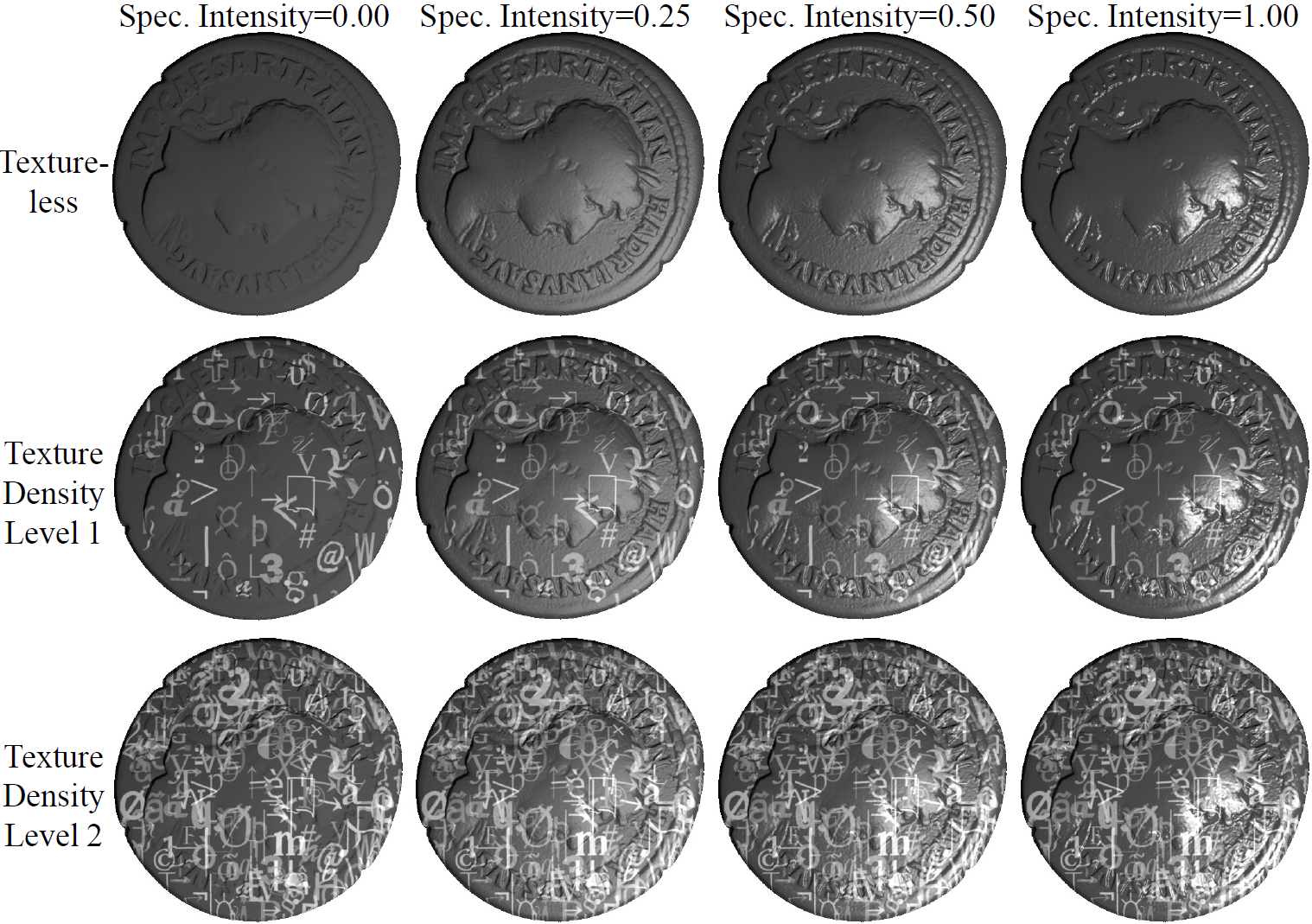

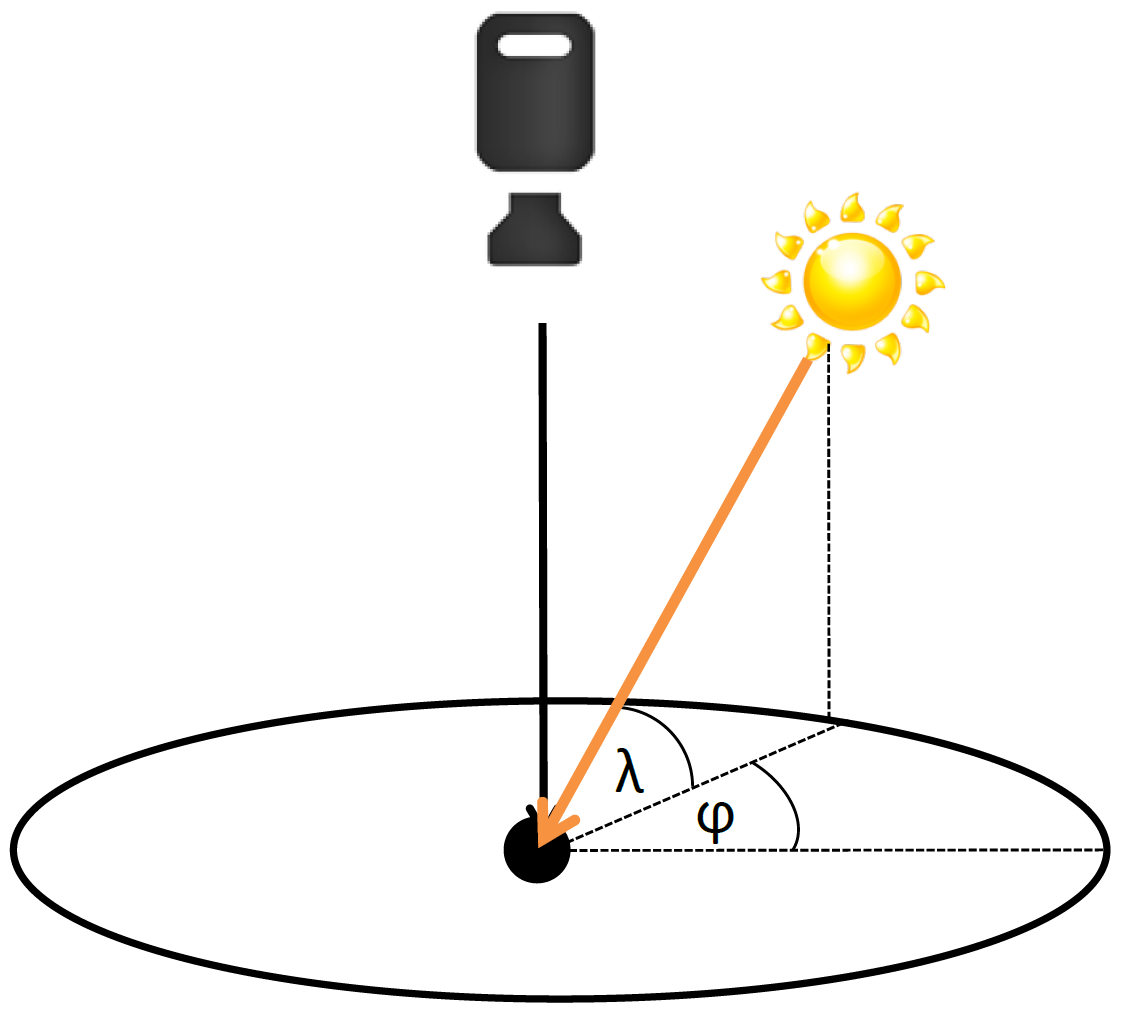

The full image dataset consists of images of 14 coin models rendered with the open-source graphics software Blender. For each model, twelve sets of 500×500 images under 65 illumination directions were rendered, where each set corresponds to one of four material BRDFs combined with one of three texture density levels. The material BRDFs represent increasing levels of specularity, from a Lambertian material with zero specularity to specular intensity values of 0.25, 0.50 and 1.00. The first texture density level has no texture and thus represents textureless objects; for the remaining two levels, synthetically generated textures were used.

The camera image plane is parallel to the coin, and light source positions are defined by their azimuth angle φ and elevation angle λ. We used eight levels of λ, each combined with eight levels of φ, to produce 64 images. The 65th image is rendered with the light placed at the camera position (i.e. λ=90°).
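To make the light geometry concrete, the following is a minimal sketch that converts an azimuth φ and elevation λ into a unit light direction and assembles the 65 directions. It assumes a coordinate frame in which the coin lies in the x-y plane and the z-axis points toward the camera, so that λ=90° coincides with the viewing direction. The actual φ and λ values used for rendering are not listed in this description, so the levels in the usage lines are hypothetical placeholders, as are the function names.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def light_direction(phi_deg: float, lam_deg: float) -> Vec3:
    """Unit vector from the coin toward the light.

    Assumed frame: coin in the x-y plane, camera on the +z axis,
    so an elevation of 90 degrees is the camera position.
    """
    phi = math.radians(phi_deg)
    lam = math.radians(lam_deg)
    return (
        math.cos(lam) * math.cos(phi),
        math.cos(lam) * math.sin(phi),
        math.sin(lam),
    )


def illumination_setup(
    elevations_deg: Sequence[float], azimuths_deg: Sequence[float]
) -> List[Vec3]:
    """All (elevation, azimuth) combinations plus one light at the camera."""
    directions = [
        light_direction(phi, lam)
        for lam in elevations_deg
        for phi in azimuths_deg
    ]
    # Final image: light at the camera position (lambda = 90 degrees);
    # azimuth has no effect there, so 0 is used.
    directions.append(light_direction(0.0, 90.0))
    return directions


# Hypothetical levels; the real SIDIRE angles are not given here.
elevations = [10.0 * k for k in range(1, 9)]  # 8 elevation levels
azimuths = [45.0 * k for k in range(8)]       # 8 azimuth levels
print(len(illumination_setup(elevations, azimuths)))  # 65
```

Passing the angles as parameters keeps the sketch independent of the specific values used in the renderings; only the structure (8 × 8 grid plus one camera-aligned light) is taken from the description above.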

In the provided RAR file, the 65 images of each combination of model, specularity level and texture level are stored in a separate directory. For instance, the directory ‘texture_level0\Ref_level2\2874-back’ contains the images of the model ‘2874-back’ rendered without texture and with a specularity of 0.50.
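A small helper for locating one subset inside the extracted archive might look like the sketch below. It assumes that the naming seen in the example generalizes, i.e. `texture_level0` to `texture_level2` and `Ref_level0` to `Ref_level3`, with the reflectance index following the specularity order given above (0 = Lambertian, 1 = 0.25, 2 = 0.50, 3 = 1.00); only `Ref_level2` = 0.50 is confirmed by the example. The root path and function names are hypothetical.

```python
from pathlib import Path
from typing import Iterator, Sequence

# Assumed mapping from specular intensity to the Ref_level index;
# only Ref_level2 = 0.50 is confirmed by the example directory.
SPECULARITY_TO_LEVEL = {0.0: 0, 0.25: 1, 0.50: 2, 1.00: 3}
TEXTURE_LEVELS = (0, 1, 2)  # level 0 = textureless


def subset_dir(root: Path, model: str, texture_level: int, specularity: float) -> Path:
    """Directory holding the 65 images of one model/texture/specularity combination."""
    if texture_level not in TEXTURE_LEVELS:
        raise ValueError(f"unknown texture level: {texture_level}")
    try:
        ref_level = SPECULARITY_TO_LEVEL[specularity]
    except KeyError:
        raise ValueError(f"unknown specularity: {specularity}") from None
    # pathlib joins with the platform's separator, so the backslash
    # layout of the archive maps onto any operating system.
    return Path(root) / f"texture_level{texture_level}" / f"Ref_level{ref_level}" / model


def all_subset_dirs(root: Path, models: Sequence[str]) -> Iterator[Path]:
    """Yield one directory per (texture level, specularity, model) combination."""
    for tex in TEXTURE_LEVELS:
        for spec in SPECULARITY_TO_LEVEL:
            for model in models:
                yield subset_dir(root, model, tex, spec)
```

For example, `subset_dir(Path("SIDIRE"), "2874-back", 0, 0.50)` yields `SIDIRE/texture_level0/Ref_level2/2874-back`. With all 14 models, `all_subset_dirs` yields 3 × 4 × 14 = 168 directories, i.e. 168 × 65 = 10920 images in total.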

Patch Dataset

The patch dataset contains 50000 matching patch pairs for each of the 12 subsets of SIDIRE. It can be used to compute feature distances for true (matching) and false (non-matching) patch pairs and compare their distributions, in the same manner as, e.g., Matthew Brown’s patch dataset. Please see [1,2] for a detailed description of the evaluation scheme for patch pair databases.
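As a rough illustration of the true/false pair scheme, the sketch below takes precomputed descriptors for the two patches of each matching pair, forms false pairs by cyclically re-pairing each left patch with a non-corresponding right patch, and reports the false positive rate at 95% true positive rate, a summary statistic reported for patch-pair benchmarks such as [2]. The L2 distance, the cyclic-shift rule for building false pairs, and all function names are assumptions for illustration, not the protocol used in [1].

```python
import math
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]


def l2(a: Descriptor, b: Descriptor) -> float:
    """Euclidean distance between two descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pair_distances(
    left: Sequence[Descriptor], right: Sequence[Descriptor], shift: int = 1
) -> Tuple[List[float], List[float]]:
    """Distances for true pairs (i, i) and false pairs (i, (i + shift) mod n)."""
    n = len(left)
    if n != len(right) or not 0 < shift < n:
        raise ValueError("need equally long lists and 0 < shift < n")
    true_d = [l2(left[i], right[i]) for i in range(n)]
    false_d = [l2(left[i], right[(i + shift) % n]) for i in range(n)]
    return true_d, false_d


def fpr_at_tpr(true_d: Sequence[float], false_d: Sequence[float], tpr: float = 0.95) -> float:
    """Fraction of false pairs accepted at the smallest threshold reaching `tpr`."""
    if not true_d or not false_d or not 0.0 < tpr <= 1.0:
        raise ValueError("need non-empty distance lists and 0 < tpr <= 1")
    ranked = sorted(true_d)
    # Number of true pairs that must be accepted; the epsilon guards against
    # floating-point round-up when tpr * n lands just above an integer.
    k = max(1, math.ceil(tpr * len(ranked) - 1e-9))
    threshold = ranked[k - 1]
    return sum(d <= threshold for d in false_d) / len(false_d)
```

A lower value means the descriptor separates matching from non-matching patches better. Comparing this value across the 12 subsets is one way to see how specularity and texturedness affect a given representation.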

The patches have a size of 64×64 and are arranged in images of size 3200×3200. Thus, every image contains 2500 patches (a 50×50 grid), with corresponding patches placed side by side. The patches of the 12 subsets are stored in directories named after their texture density and reflectance level; e.g., patches rendered without texture and with a specularity of 0.50 are contained in the directory ‘tex0_ref2’.
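The grid arithmetic works out as follows: 3200 / 64 = 50 patches per row and column, giving 50 × 50 = 2500 patches, or 1250 matching pairs per image; if every image is fully filled, 50000 pairs per subset correspond to 40 images. The sketch below computes the pixel box of each patch and of each pair. It assumes patches are numbered row by row and that the two patches of a pair are horizontally adjacent (columns 2k and 2k+1); the description says "side by side" but does not fix the exact order, and the names are hypothetical.

```python
from typing import Tuple

PATCH_SIZE = 64
IMAGE_SIZE = 3200
PATCHES_PER_ROW = IMAGE_SIZE // PATCH_SIZE  # 50
PATCHES_PER_IMAGE = PATCHES_PER_ROW ** 2    # 2500
PAIRS_PER_IMAGE = PATCHES_PER_IMAGE // 2    # 1250

Box = Tuple[int, int, int, int]  # (left, top, right, bottom), right/bottom exclusive


def patch_box(index: int) -> Box:
    """Pixel box of patch `index`, assuming row-major numbering."""
    if not 0 <= index < PATCHES_PER_IMAGE:
        raise IndexError(index)
    row, col = divmod(index, PATCHES_PER_ROW)
    left, top = col * PATCH_SIZE, row * PATCH_SIZE
    return (left, top, left + PATCH_SIZE, top + PATCH_SIZE)


def pair_boxes(pair_index: int) -> Tuple[Box, Box]:
    """Boxes of the two corresponding patches (assumed columns 2k and 2k+1).

    Because a row holds an even number of patches (50), patches 2k and
    2k+1 always lie in the same row under row-major numbering.
    """
    if not 0 <= pair_index < PAIRS_PER_IMAGE:
        raise IndexError(pair_index)
    return patch_box(2 * pair_index), patch_box(2 * pair_index + 1)
```

The returned boxes follow the (left, upper, right, lower) convention of Pillow's `Image.crop`, so a patch can be cut out of a loaded patch image by passing `patch_box(i)` to it.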

Contact: Sebastian Zambanini

References

[1] Zambanini, S., Kampel, M., “Evaluation of Low-Level Image Representations for Illumination-Insensitive Recognition of Textureless Objects”, International Conference on Image Analysis and Processing (ICIAP’13), Naples, Italy, September 2013.

[2] Brown, M., Hua, G., Winder, S., “Discriminative Learning of Local Image Descriptors”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 1, pp. 43-57, 2011.