Supervisor: Michael Reiter

Background

Flow Cytometry is a laser-based technique for measuring antigen expression levels of blood cells. It is used in research as well as in daily clinical routine for immunophenotyping and for monitoring residual numbers of cancer cells during chemotherapy. One patient’s sample contains approximately 50k to 300k cells (also called events), with up to 15 different features (markers) measured per cell. Each feature corresponds either to a physical property of a cell (cell size, granularity) or to the expression level of a specific antigen marker on the cell’s surface.

Problem Statement

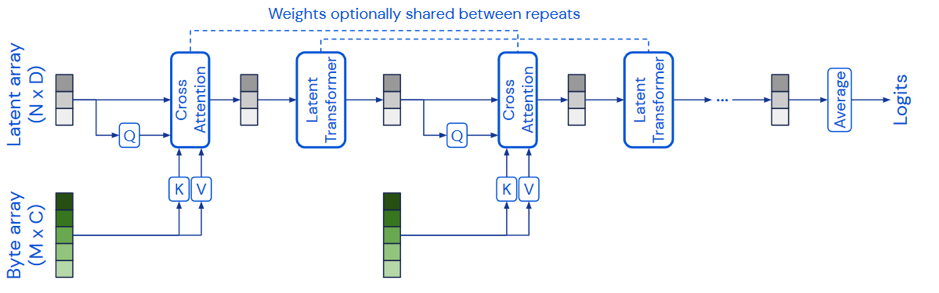

Powerful deep learning architectures such as set transformers [1] have proven suitable for cell classification in high-dimensional Flow Cytometry data [2]. Standard transformer architectures are not suitable for this task, since the memory and time consumption of self-attention grows quadratically with the input length, which is infeasible for Flow Cytometry samples with 300k events or more. Alternative architectures that approximate the self-attention mechanism and reduce the computational effort must therefore be explored. Wödlinger et al. demonstrated that set transformers are one suitable solution to this problem. However, other transformer variants such as Performer [3], Reformer [4], Perceiver [5], or FNet [6] have not yet been considered.

Figure taken from DeepMind's paper "Perceiver: General Perception with Iterative Attention" by Jaegle et al., https://arxiv.org/pdf/2103.03206.pdf
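To make the quadratic scaling concrete, the following minimal sketch estimates the memory needed to store a single attention score matrix for one sample. It assumes float32 scores and a single attention head, and it ignores activations, gradients, and batching. The latent count of 512 is a hypothetical value chosen for illustration and is not taken from any of the cited papers; it stands in for the small set of latents or inducing points that architectures such as Perceiver and set transformers attend through instead of attending over all event pairs.

```python
# Rough memory estimate for one attention score matrix (float32, single head).
# Illustrative only: real models add activations, gradients, and several heads.

BYTES_PER_FLOAT32 = 4


def attention_matrix_bytes(num_queries: int, num_keys: int) -> int:
    """Bytes needed to store one num_queries x num_keys score matrix."""
    return num_queries * num_keys * BYTES_PER_FLOAT32


n_events = 300_000  # upper end of the sample size quoted in the text
n_latents = 512     # hypothetical latent / inducing-point count (assumption)

# Full self-attention: every event attends to every event (n x n).
full = attention_matrix_bytes(n_events, n_events)

# Latent cross-attention: a small latent array attends to all events (m x n).
latent = attention_matrix_bytes(n_latents, n_events)

print(f"full self-attention:    {full / 1e9:.1f} GB")
print(f"latent cross-attention: {latent / 1e9:.2f} GB")
print(f"reduction factor:       {full / latent:.0f}x")
```

Under these assumptions, the full score matrix for 300k events would take about 360 GB, while the latent variant needs about 0.6 GB. The cost of the latent variant grows linearly with the number of events, which is what makes this family of architectures attractive for this data.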


Dataset

More than 600 different clinical samples of Acute Lymphoblastic Leukemia (ALL) patients are available from three different centers: St. Anna Hospital Vienna, Charité Berlin, and Garrahan Hospital Buenos Aires. With each sample containing roughly 200k events, the overall data pool contains approximately 650 × 2 × 10^5 = 130 million cells.
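The size estimate above can be reproduced with a few lines of Python. The sketch below also derives a rough raw storage footprint, assuming every event carries the full 15 markers mentioned in the Background section and that each value is stored as a 4-byte float; both are assumptions for illustration, since the actual file format and panel size per sample are not stated here.

```python
# Back-of-the-envelope size of the data pool described in the text.

def total_events(num_samples: int, events_per_sample: int) -> int:
    """Total number of cells (events) across all samples."""
    return num_samples * events_per_sample


def raw_size_bytes(num_events: int, num_markers: int, bytes_per_value: int = 4) -> int:
    """Raw storage for a dense events x markers table (float32 by default)."""
    return num_events * num_markers * bytes_per_value


samples = 650                # sample count used in the text's calculation
events_per_sample = 200_000  # "roughly 200k events" per sample
markers = 15                 # upper bound on features per event (assumption: all present)

events = total_events(samples, events_per_sample)
size = raw_size_bytes(events, markers)

print(f"total events: {events:,}")
print(f"raw float32 size: {size / 1e9:.1f} GB")
```

This gives 130 million events and, under the stated assumptions, roughly 7.8 GB of raw feature values.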

Goal

The goal of this work is to implement at least two of the transformer variants described above and to evaluate them against the set transformer of Wödlinger et al. on the given dataset. The advantages, disadvantages, and practical aspects of the different architectures should be compared.

Workflow

Literature research

Implementation in Python

Evaluation

Written report or thesis (in English) and final presentation

Requirements

Python

Basic knowledge of deep learning (PyTorch, TensorFlow) and machine learning