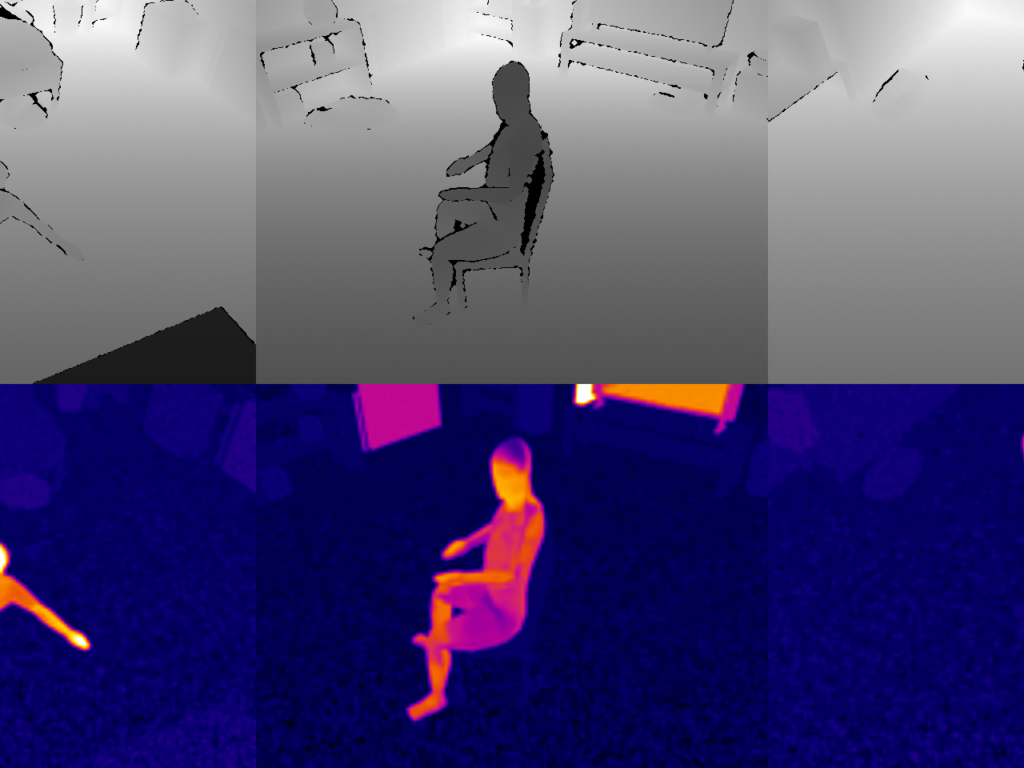

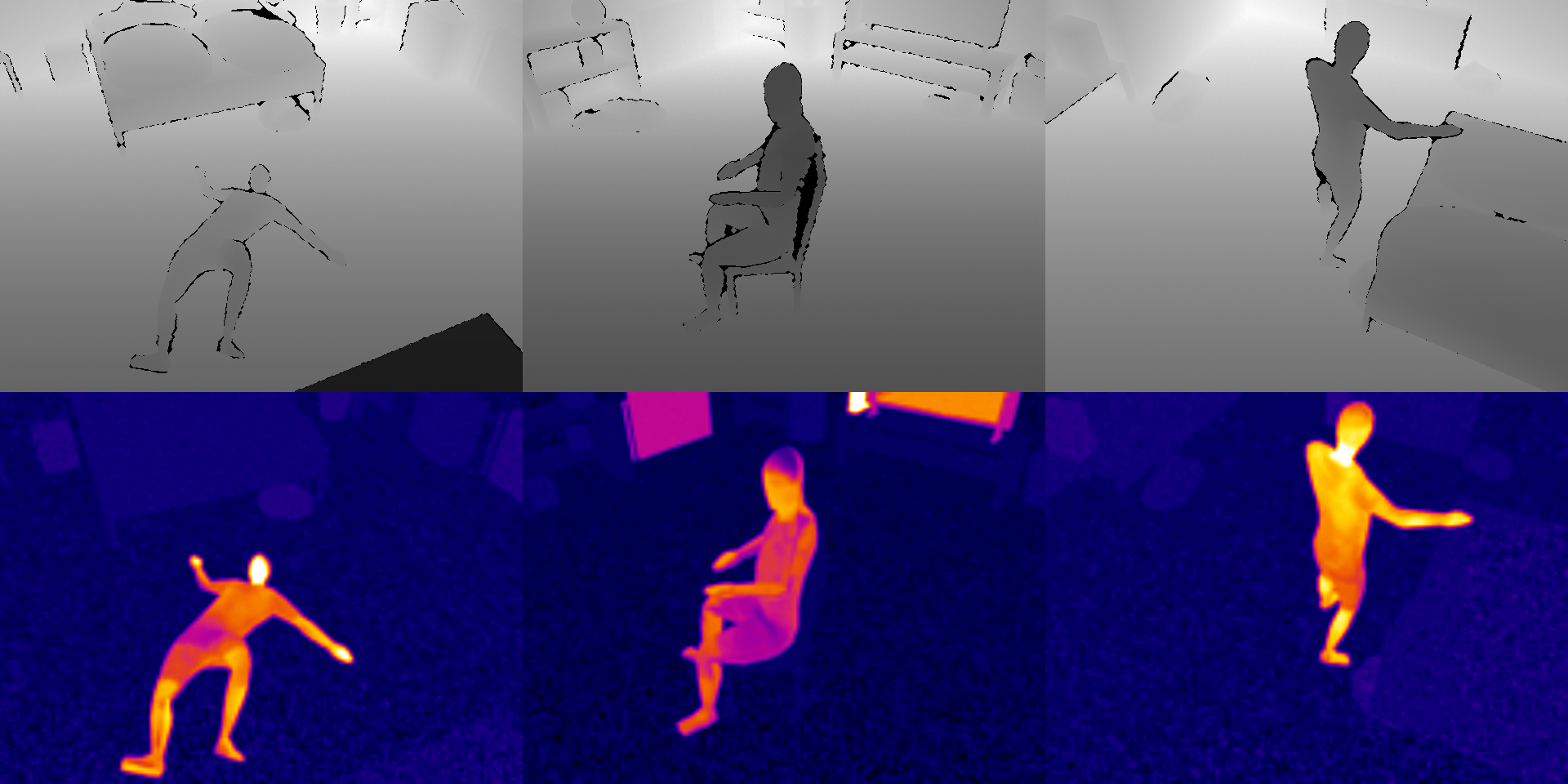

Synthetic Depth & Thermal (SDT) Dataset

Description

The Synthetic Depth & Thermal (SDT) dataset consists of 40k synthetic and 8k real depth and thermal stereo images depicting human behavior in indoor environments. The samples show uniquely posed lying, sitting and standing persons in four different room types (living room, bedroom, bathroom and kitchen), recorded from an elevated position. A fourth control class with empty rooms is also provided. Both parts of SDT are balanced across these four classes and four room types. The synthetic part is intended as training (and validation) data for uni-/multi-modal pose classification or person detection models, while the real part can be used to assess generalization performance. To facilitate supervised training, pose labels and person bounding boxes are provided for all images. The real images were captured by a multi-modal stereo camera system consisting of an Orbbec Astra depth camera and a Flir Lepton 3.5 thermal camera. The synthetic images, which share the image characteristics of these cameras, were produced by rendering virtual 3D scenes in Blender and then adding camera-specific noise.
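To make the balancing concrete, the following is a minimal sketch of the sample counts one would expect per class and room type. It rests on two assumptions that the description does not confirm: that "40k" and "8k" are exact totals, and that "balanced" means an equal number of samples for every (class, room type) pair. The names `POSE_CLASSES`, `ROOM_TYPES` and `balanced_counts` are illustrative and not part of the dataset.

```python
from itertools import product

# Class and room names as listed in the description; identifiers are illustrative.
POSE_CLASSES = ["lying", "sitting", "standing", "empty"]
ROOM_TYPES = ["living room", "bedroom", "bathroom", "kitchen"]


def balanced_counts(total):
    """Split `total` samples evenly over every (class, room type) pair."""
    cells = list(product(POSE_CLASSES, ROOM_TYPES))
    per_cell, remainder = divmod(total, len(cells))
    if remainder:
        raise ValueError("total is not divisible by the number of cells")
    return {cell: per_cell for cell in cells}


# Assumption: the rounded figures from the description are exact totals.
synthetic = balanced_counts(40_000)
real = balanced_counts(8_000)

print(synthetic[("lying", "kitchen")])  # 2500
print(real[("empty", "bedroom")])       # 500
```

Under these assumptions each of the 16 (class, room type) pairs would hold 2,500 synthetic and 500 real samples; the actual per-cell counts should be checked against the downloaded data.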

Download and Use

The dataset is freely available for non-commercial research use. Please cite our paper [1] when using the dataset in your research.

[1] Pramerdorfer C., Strohmayer J. and Kampel M., “SDT: A Synthetic Multi-Modal Dataset for Person Detection and Pose Classification”, in Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), October 2020, Abu Dhabi, United Arab Emirates, doi: 10.1109/ICIP40778.2020.9191284.